Medicine is progressing at a remarkable rate, with new discoveries and therapies emerging every day. The medicine the world knows today would have been unrecognizable twenty years ago, and the medicine of twenty years from now is sure to be unrecognizable to us today. Research bears a great deal of responsibility for this, being intrinsically linked to the progression of medicine, from helping battle diseases and disorders to improving patients’ quality of life. From learning how to reprogram a somatic cell into a stem cell to creating drugs that help a patient’s own immune system fight off cancer, medical research has revolutionized medicine. However, the culture surrounding medical research has produced a toxic environment in which bad science is rewarded over good science.

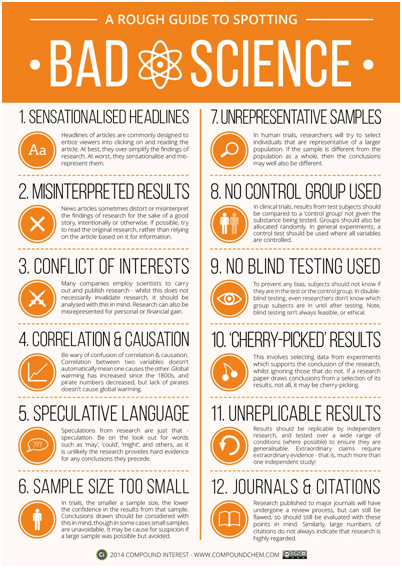

Spotting Bad Science

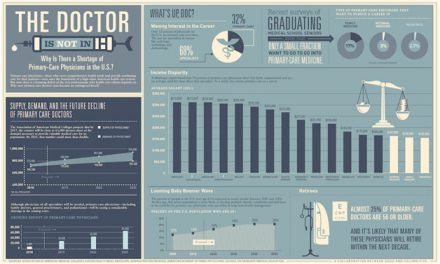

The National Institutes of Health (NIH) spends roughly $39.2 billion on medical research each year, with over 80% earmarked for grants that researchers can apply for. The majority of grants the NIH awards are R01-equivalent grants, which had a success rate of roughly 21.7% in 2018. Considering that grant funding can determine whether a lab survives, this percentage is not terribly high. When deciding whether to award a grant, the NIH generally looks to the researcher’s previous publications. Combined with the fact that retaining an academic position at most institutions depends on consistently authoring academic articles, the result has been a “publish or perish” culture that encourages poor research practices.

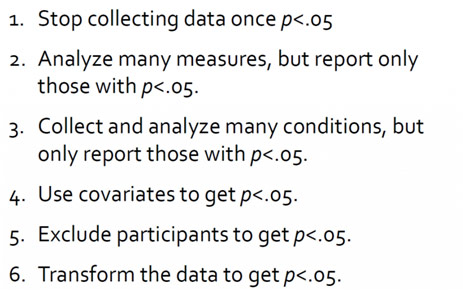

One such practice is known as p-hacking, in which the data or analyses are manipulated to produce falsely significant p-values. A p-value, in brief, is a statistical measure of how likely results at least as extreme as yours would be if chance alone were at work. Imagine a researcher conducts a large experiment with numerous variables being tracked, like an experiment testing whether a drug reduces a fever that also monitors dozens of other symptoms for potential side effects. The researcher’s original hypothesis (the drug reduces fever) gives nonsignificant results, but the researcher goes back to the data and starts running all sorts of statistical tests between various variables to cherry-pick something that is significant. In this unfortunately all-too-common situation, it is more than likely that the researcher finds some correlation between two variables that, by chance, happens to be statistically significant. The issue here is that the researcher hypothesizes after the results are known (HARKing), which is the opposite of how good science should work.
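The fever-drug scenario above can be sketched as a small simulation. This is only an illustration under stated assumptions: the drug has no effect on anything, every tracked outcome is independent with unit variance, and each outcome is checked with a simple two-sided z-test at the 0.05 threshold. The function names and the group size of 20 patients are hypothetical choices made for this example, not taken from any real study.

```python
import random
from statistics import NormalDist, mean

ALPHA = 0.05  # conventional significance threshold


def two_sided_p(drug, placebo):
    """Two-sided z-test p-value for a difference in means.

    Assumes equal group sizes and a known variance of 1 in both groups.
    """
    n = len(drug)
    z = (mean(drug) - mean(placebo)) / (2 / n) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


def finds_something(n_outcomes, n_patients, rng):
    """Simulate one study in which the drug truly does nothing.

    Returns True if at least one tracked outcome reaches p < ALPHA.
    """
    for _ in range(n_outcomes):
        # Both groups are drawn from the same distribution: no real effect.
        drug = [rng.gauss(0, 1) for _ in range(n_patients)]
        placebo = [rng.gauss(0, 1) for _ in range(n_patients)]
        if two_sided_p(drug, placebo) < ALPHA:
            return True
    return False


def false_positive_rate(n_outcomes, n_studies=1000, n_patients=20, seed=0):
    """Fraction of no-effect studies that still yield a 'significant' finding."""
    rng = random.Random(seed)
    hits = sum(finds_something(n_outcomes, n_patients, rng)
               for _ in range(n_studies))
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 10, 30):
        print(f"{k:>2} outcomes tested -> rate {false_positive_rate(k):.2f}")
```

With a single pre-specified outcome, the rate should sit near the nominal 5%. As more outcomes are tested after the fact, the chance of stumbling on at least one "significant" result climbs toward certainty, even though nothing real is there to find.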

Certainly, academic journals share some of the blame for practices such as p-hacking, as journals tend to prefer results that are statistically significant over those that are not. It is the difference between saying “drinking a cup of coffee a day is linked to increased cancer risk” and “drinking a cup of coffee a day may or may not be linked to increased cancer risk.” The former seems more groundbreaking than the latter, so it is more likely to get published. Imagine that twenty research groups conducted this study and nineteen found no correlation, but one found significance by chance. In fact, you could expect this to happen, considering that the widely accepted threshold for significance is 0.05, or 5%: on average, one in twenty tests of a nonexistent effect will cross it. While many people argue that our standard for significance is too low a bar, the larger issue here is perhaps that the only study to get published might be the one that found significance. With “negative” results rarely being published, we tend not to have the full picture, which helps give rise to false science. Considering that only 4% of a random sample of 1000 medical research abstracts reported nonsignificant p-values, the issue is quite pervasive.
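The arithmetic behind the twenty-groups example can be worked out directly. The sketch below assumes the twenty studies are independent, that there is truly no link between coffee and cancer, and that each study uses the 0.05 threshold, so the number of "significant" studies follows a binomial distribution. The function name `prob_significant` is a hypothetical helper for this illustration.

```python
from math import comb


def prob_significant(k, n_studies=20, alpha=0.05):
    """P(exactly k of n_studies reach p < alpha) when no real effect exists."""
    return comb(n_studies, k) * alpha**k * (1 - alpha) ** (n_studies - k)


# Average number of studies that cross the threshold by chance alone.
expected_false_positives = 20 * 0.05

# Chance that every study correctly finds nothing, and its complement.
p_none = prob_significant(0)
p_at_least_one = 1 - p_none

if __name__ == "__main__":
    print(f"expected chance findings: {expected_false_positives:.1f}")
    print(f"P(no study is significant):  {p_none:.3f}")
    print(f"P(exactly one significant):  {prob_significant(1):.3f}")
    print(f"P(at least one significant): {p_at_least_one:.3f}")
```

Under these assumptions, at least one of the twenty groups finds a spurious link roughly 64% of the time. If that study is the only one a journal accepts, the published record shows a positive finding and none of the nineteen null results.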

Steps to P-Hacking

P-hacking and other poor research methods are contributing to a harrowing replication crisis in science: numerous foundational studies could not be replicated by independent groups, suggesting that they may be downright wrong. In a landmark 2005 study, Dr. John Ioannidis found that of the 45 most “highly cited original clinical research studies” that reported effective treatments, only 44% were successfully replicated. Similarly, when the biotechnology company Amgen tried to replicate over 50 important cancer studies, only 6 replicated. In medicine, the downstream effects of this type of bad science can prove disastrous, contributing to devastating falsehoods that infiltrate the public consciousness and prove nearly impossible to deracinate, such as “vaccines cause autism.”

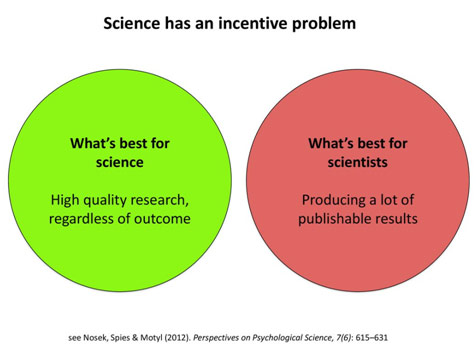

In a video by Coffee Break, Dr. Paul Smaldino, assistant professor of cognitive science at UC Merced, explains the replication crisis using Darwin’s theory of natural selection. Because it is difficult to judge the quality of research, academic institutions “select” for a proxy for research quality, such as a high number of publications. Dishearteningly, as a result, those who do less rigorous research in order to put out as many papers as possible tend to survive and pass their methodologies on to their students, the next generation. Meanwhile, scientists who are careful and methodical find themselves out of a job because they are simply not productive enough. The cost of medical research today is the loss of good scientists.

Incentive Mismatch in Science

The goal should thus be to realign the incentives of research with the incentives of researchers, rewarding getting the research right rather than just getting publishable results. Realigning the incentives starts with transforming the culture of science, which means enlisting the help of academic institutions, journals, and granting agencies. Instead of always looking for “novel” discoveries, we need to publish more replication studies. We need to start rewarding transparency and rigor in science, including having researchers disclose their raw data. The replication crisis is demoralizing because it calls the foundations of science into question, but awareness of it can allow us to make reforms to research that will transform the field for the better.

REFERENCES

https://www.nih.gov/about-nih/what-we-do/budget

https://www.ncbi.nlm.nih.gov/pubmed/16014596

https://www.castoredc.com/blog/replication-crisis-medical-research/