I had the great pleasure of attending the 15th annual meeting of the Academic Surgical Congress (ASC) in Orlando, Florida, to give an oral presentation of my research, “Cardiothoracic Surgeons Who Attended a Top-Tier Medical School Exhibit Greater Academic Productivity.” With a team at the Stanford Cardiothoracic Surgery Department, we built a database of all academic cardiothoracic surgeons in the US and found that attending a top-25 U.S. News & World Report medical school is correlated with greater academic productivity, but that these differences can be ameliorated by publishing at least three papers during surgical training. One implication of our study is that medical schools may consider adding research opportunities to stimulate early academic productivity, and that training institutions may consider enhancing their research curricula to support trainees who did not have the same early research exposure as others. However, in this first part of a two-part series, I want to discuss some of the sessions on machine learning that I attended at ASC 2020.

There was a lunch session on “Artificial Intelligence (AI), Machine Learning & Surgical Science: Reality vs. Hype” that offered a fascinating look at the many sides of the debate around AI. Dr. Majed Hechi from Massachusetts General spoke about a new era of decision making in surgery. He first introduced neural networks, which are built from computational units called “neurons,” with metaphorical “dendrites” taking in information and passing it to other neurons through “axons.” After the information passes through several layers of neurons, the output is produced. The problem, however, is that these neural networks are not interpretable: their internal workings cannot easily be inspected. That being said, machine learning can be extremely powerful, specifically because it can use structured and unstructured data in tandem. A 2016 study showed a machine learning algorithm predicting colorectal surgical complications. Using only the most frequent words in patient records as the predictor variable, it achieved an area under the curve (AUC) of 0.83 (an AUC of 1 is the best possible). With blood tests alone, the AUC was 0.74, and with vital sign data alone, the AUC was 0.65. When these three predictor variables were combined, however, the AUC rose to 0.96, which is quite outstanding and speaks to the power and flexibility of machine learning to use numerous heterogeneous variables.
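To make the AUC figures more concrete, here is a minimal Python sketch of what the number measures: the probability that a randomly chosen patient who had a complication is given a higher risk score than a randomly chosen patient who did not. The labels and scores below are made-up toy values, not data from the 2016 study, and the function name `auc` is my own.

```python
def auc(labels, scores):
    """Probability that a random positive case outscores a random negative case.

    Ties count as half a win. This is the pairwise (Mann-Whitney) view of AUC.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy, made-up data: 1 = complication, 0 = no complication.
labels = [1, 1, 1, 0, 0, 0]
perfect = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]        # every positive outranks every negative
uninformative = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5]  # the model cannot tell cases apart
partial = [0.9, 0.4, 0.7, 0.3, 0.6, 0.1]        # one positive ranked below one negative

print(auc(labels, perfect))        # 1.0
print(auc(labels, uninformative))  # 0.5
print(auc(labels, partial))        # 8 of 9 pairs ranked correctly
```

An AUC of 0.5 is what a coin flip would achieve, which is why 0.65 for vital signs alone is modest and 0.96 for the combined model is striking.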

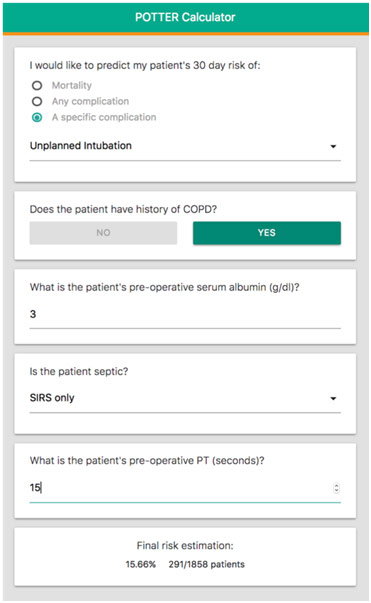

Machine learning algorithms can also capture nonlinear relationships in a way that traditional risk-stratification models cannot. Algorithms using optimal classification trees (decision trees) have actually been implemented in a smartphone app called POTTER (Predictive OpTimal Trees in Emergency Surgery), which helps predict a patient’s 30-day risk of mortality, any complication, or a specific complication with about four questions, offering a percentage (e.g., 15.66%) along with the proportion of other patients who had a similar case (e.g., 291/1858 patients). While POTTER is highly effective, Dr. Hechi suggested that AI’s impact on medicine is stunted because it is not clear whether these models are actually making the transition to clinical practice and making a difference. While surgeons will always remain the ultimate decision-makers and communicators with patients, Dr. Hechi argued that AI must also have a role in medicine.
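As a rough illustration of how a tree can turn a handful of yes/no answers into a percentage plus a "similar patients" count, here is a small Python sketch. It is not POTTER's actual tree: the questions (`age_over_65`, `ventilator_dependent`) and all leaf counts except the 291/1858 example quoted above are hypothetical, chosen only to show the mechanics.

```python
def leaf(events, total):
    """A leaf stores how many similar patients had the outcome, out of how many."""
    return {"events": events, "total": total}


def ask(question, yes, no):
    """An internal node asks one yes/no question and branches on the answer."""
    return {"question": question, "yes": yes, "no": no}


def predict(node, answers):
    """Walk from the root to a leaf, then report risk as events / total."""
    while "question" in node:
        node = node["yes"] if answers[node["question"]] else node["no"]
    return node["events"] / node["total"], node["events"], node["total"]


# Hypothetical tree. Only the 291/1858 leaf echoes the example in the text.
tree = ask(
    "age_over_65",
    yes=ask(
        "ventilator_dependent",
        yes=leaf(120, 300),
        no=leaf(291, 1858),
    ),
    no=leaf(40, 2000),
)

answers = {"age_over_65": True, "ventilator_dependent": False}
risk, events, total = predict(tree, answers)
print(f"{risk:.2%} ({events}/{total} patients)")  # 15.66% (291/1858 patients)
```

Because every prediction traces back to a short path of questions and a count of real patients, a tree like this stays interpretable in a way that a neural network does not.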

POTTER in Action

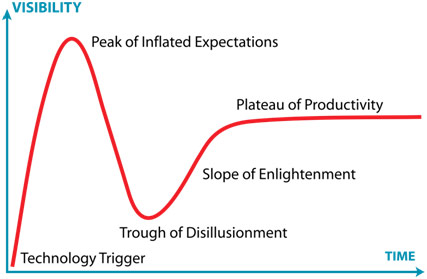

Dr. Thomas Ward from the Surgical Artificial Intelligence Laboratory (SAIL) at Massachusetts General focused more on computer vision, where an algorithm actually sees the data and understands it. However, Dr. Ward cautioned that machine learning, like all other technology, will follow Gartner’s Hype Cycle, in which a peak of inflated expectations gives way to a trough of disillusionment. Recovery comes with the slope of enlightenment, which ultimately leads to a plateau of productivity. Right now, computer vision is at the peak of inflated expectations, with one machine learning algorithm acting as a 24/7 radiologist with an 89% sensitivity for diagnosing pneumothorax and another diagnosing a picture of a lesion better than a board-certified dermatologist. Dr. Ward contended that we are about to hit a trough of disillusionment, though, because the machine learning algorithm is not necessarily learning what we think it is learning. For example, in a majority of pneumothorax films, there is a chest tube in the patient, so the algorithm may simply be learning that a chest tube means a pneumothorax, a rule that does not always hold. Similarly, dermatologists place a ruler next to a lesion if they think the lesion is malignant, so 30% of images have a ruler sitting beside the lesion. The algorithm may thus just be learning that, if the image has a ruler, the lesion is malignant. In that case, the algorithm is not doing anything novel because it is only repeating something the dermatologist already knows.
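The ruler problem can be sketched in a few lines of Python. This is a deliberately simplified toy, not the dermatology algorithm itself: the "model" just picks whichever single yes/no feature best matches the label in its training images, and every count below is made up (apart from setting 30% of training images to contain a ruler, as in the talk). The feature name `irregular_border` is a hypothetical stand-in for a real property of the lesion.

```python
def make_images(ruler, irregular_border, malignant, count):
    """Create `count` identical toy images described by binary features."""
    return [
        {"ruler": ruler, "irregular_border": irregular_border, "malignant": malignant}
        for _ in range(count)
    ]


def accuracy(feature, images):
    """Fraction of images where the feature value equals the true label."""
    return sum(img[feature] == img["malignant"] for img in images) / len(images)


def sensitivity(feature, images):
    """Fraction of truly malignant images that the feature flags."""
    malignant = [img for img in images if img["malignant"] == 1]
    return sum(img[feature] == 1 for img in malignant) / len(malignant)


FEATURES = ["ruler", "irregular_border"]

# Training images: a ruler was placed next to every lesion suspected to be malignant.
train = (
    make_images(1, 1, 1, 25)
    + make_images(1, 0, 1, 5)
    + make_images(0, 0, 0, 60)
    + make_images(0, 1, 0, 10)
)

# New clinic: the same lesions, but nobody places rulers.
clinic = (
    make_images(0, 1, 1, 25)
    + make_images(0, 0, 1, 5)
    + make_images(0, 0, 0, 60)
    + make_images(0, 1, 0, 10)
)

# "Training": keep the single feature that best matches the labels.
learned = max(FEATURES, key=lambda f: accuracy(f, train))

print(learned)                       # ruler
print(accuracy(learned, train))      # 1.0
print(sensitivity(learned, clinic))  # 0.0
```

On its own training images the ruler rule looks flawless, yet in a clinic without rulers it misses every malignant lesion, which is the gap between what the algorithm learned and what we assumed it learned.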

In another application of computer vision, Dr. Ward spoke about how annotating surgical videos to tell the computer what is going on at each step could create a bright future of intraoperative guidance, in which the computer predicts and suggests the next step during surgery. Here too there are issues, because the community is pulling annotations out of electronic medical records, which are generally not the most reliable data and are subject to the garbage-in-garbage-out problem. Indeed, 47.5% of operative notes fail to adequately describe operative events. You also cannot shift responsibility for annotating the video to the computer, because one experiment showed an algorithm mistaking a red stop sign with four stickers on it for a white 45 mph speed limit sign. Furthermore, while Google farms out identification labor to the public with its reCAPTCHA tests, you obviously cannot farm out surgical annotation labor to the public, and there are too few surgeons to do it. On top of that, as with the algorithms for pneumothorax and lesions, there is a fundamental database issue for surgical annotations. In one popular laparoscopic cholecystectomy surgical video database, the surgeons pull the bag in and pull the scope out, which is a unique organizational quirk rather than a widely used step. Dr. Ward emphasized that we need well-curated datasets that offer a representative sampling of the population: we need a variety of difficulties (not just easy cases), more geographic diversity (not just one center’s or one country’s cases), and more.

The Hype Cycle

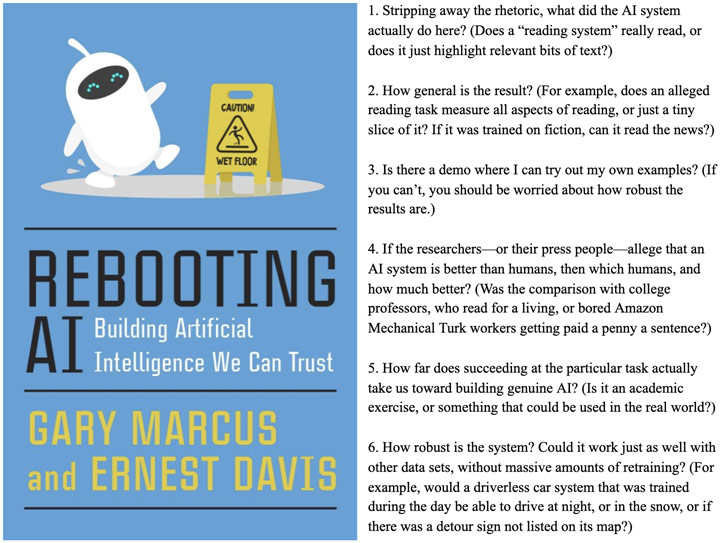

University of Vermont’s Dr. Gary An then gave a fascinating presentation on the negative aspects of AI, bringing all the spectacular expectations back to reality. He began by addressing the appeal of AI: it offers shortcuts to doing things the hard way, giving us results like magic. If you get enough data and use clever algorithms, you will get an answer; you do not need to think anymore because the AI has done it for you. The problem here is that we have unrealistic expectations. For one, there are inherent problems with the data that we must supply to the AI. With data, what you choose to look for determines what you will find, which is not necessarily what is true. Further, data is only retrospective (bound by history): it can describe, but it cannot tell you something new because all the information it can connect must already be in the dataset. One algorithm found that asthma lowers the risk of pneumonia, when in reality asthma patients were simply more likely to be admitted to the ICU, where care was better: algorithms are blind to confounders not coded in the dataset. On top of that, scientists often train their machine learning algorithms with an 80/20 database split (80% training, 20% testing), but this methodology is rooted in internal validation alone, which means very little. After all, you are testing the algorithm on data drawn from the same database it was trained on, so, of course, the algorithm is going to look spectacular. The algorithm, however, cannot necessarily generalize or apply its knowledge to other contexts because all it knows comes from this one isolated database.
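The asthma example is easier to see with numbers. The Python sketch below uses entirely hypothetical patient counts that I made up for illustration (they are not from the study Dr. An described) to show how a confounder that is missing from the dataset, here the level of care, can make a risk factor look protective.

```python
# Hypothetical counts, made up for illustration: (patients, deaths).
cohort = {
    ("asthma", "icu"): (90, 9),
    ("asthma", "ward"): (10, 3),
    ("no_asthma", "icu"): (100, 8),
    ("no_asthma", "ward"): (900, 225),
}


def crude_mortality(group):
    """Mortality for a group when the level of care is ignored."""
    patients = sum(n for (g, care), (n, d) in cohort.items() if g == group)
    deaths = sum(d for (g, care), (n, d) in cohort.items() if g == group)
    return deaths / patients


def stratum_mortality(group, care):
    """Mortality for a group within one level of care."""
    patients, deaths = cohort[(group, care)]
    return deaths / patients


# What an algorithm sees if ICU admission is not recorded in the data.
print(f"asthma:    {crude_mortality('asthma'):.1%}")     # 12.0%
print(f"no asthma: {crude_mortality('no_asthma'):.1%}")  # 23.3%

# What appears once patients are compared within the same level of care.
for care in ("icu", "ward"):
    a = stratum_mortality("asthma", care)
    b = stratum_mortality("no_asthma", care)
    print(f"{care}: asthma {a:.1%} vs no asthma {b:.1%}")
```

In this toy cohort, asthma patients look safer overall only because most of them were sent to the ICU. Within either level of care they do worse, and an algorithm that never sees the ICU variable has no way to discover that.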

Thus, how much trust are you going to give to a number the algorithm spits out, knowing that its quality will certainly degrade once you put it out into the wild? How many misses are tolerable? If the AI offers no explainability, who takes the blame for the errors it will certainly make? Dr. An declared that these algorithms will need ongoing feedback but doubted that this would truly happen because people get lazy. Just as Tesla owners fall asleep at the wheel when their car is driving itself, radiologists may also get lazy and agree with the AI’s diagnosis instead of critically examining each x-ray. As a result, AI offers only a “faux objectivity,” because the way the numbers are generated is an artifact of bias in the data. Dr. An emphasized that we need to be skeptical of claims about AI and machine learning and use them, for now, only as a research aid and a non-critical filter for process improvement. He likened all of this to releasing surgical trainees into the real world: they are not perfect because they have trained only on their institution’s data, but they can get better.

Skeptical Questions to Ask Any AI Project

Attending ASC was a spectacular experience for me to learn more about medicine at the highest level through informative sessions filled with impactful presentations. It was such a privilege to be able to attend this national surgical conference as a high school student, and I truly enjoyed every moment of it.

References

Academic Surgical Congress: Celebrating 15 Years. Society of University Surgeons, www.susweb.org/2019/06/26/2020-asc-call-for-abstracts/. Accessed 1 Mar. 2020.

Health Care App: POTTER. Interpretable AI, www.interpretable.ai/solutions.html. Accessed 1 Mar. 2020.

Kemp, Jeremy. Hype Cycle. Wikipedia, en.wikipedia.org/wiki/Hype_cycle. Accessed 1 Mar. 2020.

Rebooting AI: Building Artificial Intelligence We Can Trust. ZDnet, www.zdnet.com/article/for-a-more-dangerous-age-a-new-critique-of-ai/. Accessed 1 Mar. 2020.

Sass, Christine. 15th Annual Academic Surgical Congress. 9 Oct. 2019. Association for Academic Surgery, www.aasurg.org/future-meeting/academic-surgical-congress/save-the-date-2020/. Accessed 1 Mar. 2020.